Description

This one-week summer school is part of the PSL-maths program of PSL University. It is devoted to high-dimensional probability and algorithms. The target audience is young and less young mathematicians, starting from the PhD level. The school will take place at ÉNS Paris, from July 1 to 5, 2019.

Titles and abstracts

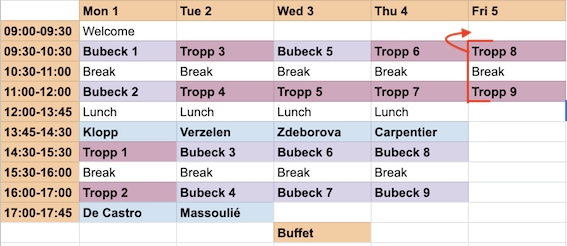

Schedule

Registration

Registration is free but mandatory. Some limited support is available for young participants. The deadline for registration is April 1, 2019. Registration may close earlier if the number of registered participants exceeds the capacity of the room.

Organizers and sponsors

This school is supported by PSL-maths, a program of PSL University. It is additionally supported by CNRS. The organizers are Claire Boyer, Djalil Chafaï, and Joseph Lehec. The video recording was produced by Thierry Bohnke and Marianne Herve from OHNK.